Datasets

To help computers understand 3D environments, we have collected and semantically annotated a variety of large-scale 3D datasets. We have annotated a) 3D reconstructions of real-world environments, b) synthetic scenes composed of individual 3D objects (which allows for manipulation and editing), and c) large datasets of 3D objects that can be linked to other datasets and used to construct new 3D scenes.

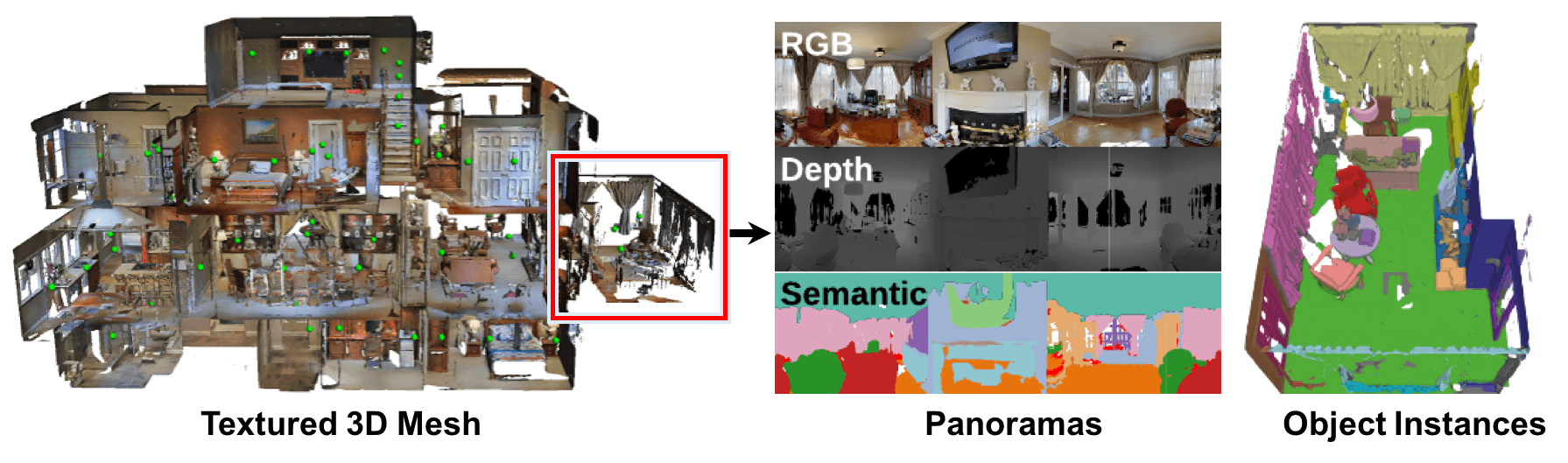

The Matterport3D dataset contains RGBD data and 3D reconstructions of 90 properties captured with Matterport cameras.

The ScanNet dataset consists of 1513 RGBD scans of 707 real-world indoor environments that have been reconstructed and semantically annotated. The data can be used for semantic understanding tasks such as semantic labeling of voxels.
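As a rough illustration of what semantic labeling of voxels involves, the sketch below assigns each voxel the most frequent label among the annotated points that fall inside it. It does not use the ScanNet file format or tooling: the function name `label_voxels`, the input layout (a list of `(x, y, z)` points with one label per point), and the majority-vote rule are all assumptions made for this example.

```python
import math
from collections import Counter, defaultdict


def label_voxels(points, labels, voxel_size):
    """Map each occupied voxel to the majority label of the points inside it.

    Hypothetical helper for illustration only; not part of any dataset toolkit.
    """
    votes = defaultdict(Counter)
    for (x, y, z), label in zip(points, labels):
        # Integer voxel index along each axis.
        key = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        votes[key][label] += 1
    # Keep the most common label per voxel.
    return {key: counts.most_common(1)[0][0] for key, counts in votes.items()}


# Toy input: four annotated points, voxel edge length of 0.5 (arbitrary units).
points = [(0.1, 0.1, 0.1), (0.2, 0.3, 0.1), (0.4, 0.4, 0.4), (1.2, 0.1, 0.1)]
labels = ["chair", "chair", "floor", "wall"]
voxel_labels = label_voxels(points, labels, voxel_size=0.5)
```

With this toy input, the first three points share one voxel, where "chair" outvotes "floor", and the fourth point occupies a separate voxel labeled "wall".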

The SUNCG dataset consists of over 45K synthetic scenes that people created from 2K 3D models. The data was collected from Planner5D.

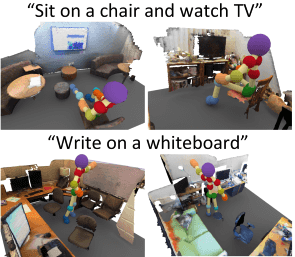

The PiGraphs dataset consists of 26 reconstructed RGBD scans, together with recordings of people performing common actions and annotations of the interactions between the people and objects.

The Stanford Scene Database consists of 133 synthetic scenes created by people.

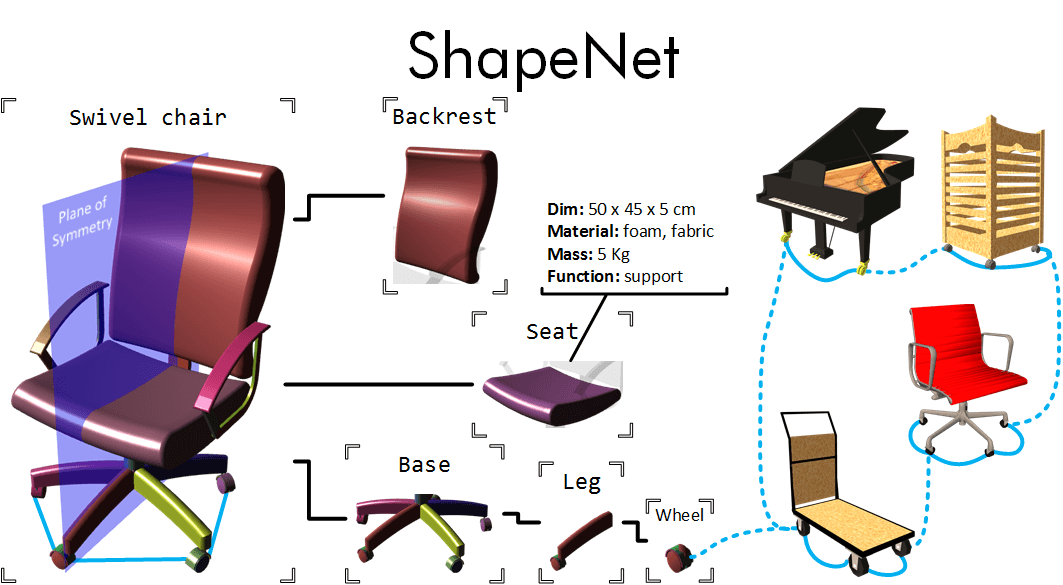

ShapeNet is an ongoing effort to collect and enrich 3D models with semantic information.